With its sense of touch, Stanford’s OceanOne android can assist with recovery efforts on sea and land

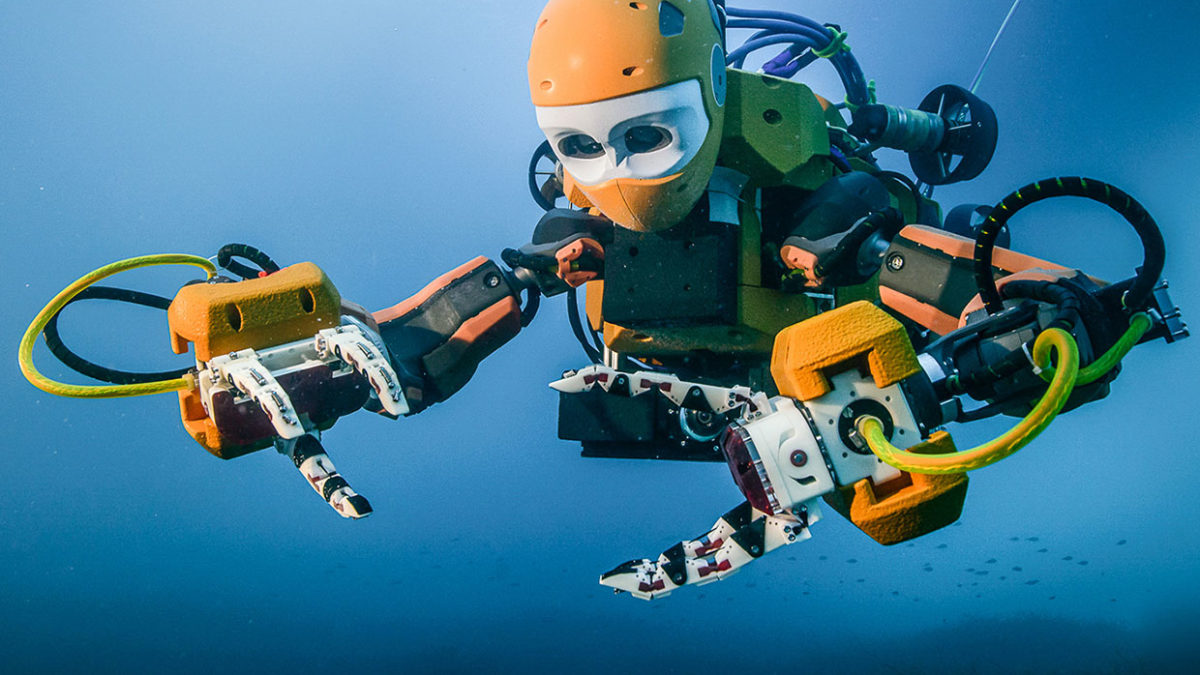

A diving android invented by IEEE Life Fellow Oussama Khatib could someday take over jobs too dangerous for humans. OceanOne [above] was built at Stanford, where Khatib is a professor of computer science.

On its first trial in 2016, OceanOne dove 100 meters below the surface of the Mediterranean Sea to retrieve a grapefruit-size vase from the wreckage of La Lune, a 17th-century sailing vessel. The wreck lies well beyond the maximum safe diving depth for a scuba diver, which is around 40 meters. Khatib sat in a boat on the surface and used haptic controls to direct the robot and maneuver its limbs.

“By using haptics and artificial intelligence, we are talking about all sorts of applications where humans and machines could collaborate on challenging tasks,” Khatib said of the expedition during a panel discussion on robots at the IEEE Vision, Innovation, and Challenges Summit, held in San Francisco in May. “Underwater work is not the only thing we have in mind for OceanOne.”

Haptically operated androids could do tasks too dangerous for humans, Khatib says. They could give ecologists a hand, for example, by collecting samples of coral and sediment from the ocean floor.

And once remotely operated androids are designed to walk and crawl, Khatib foresees jobs on land, too. They could perform disaster recovery operations in mountains and caves, he suggests, as well as work in mines and on drilling operations.

“Think about how much we could do,” he says, “if we were able to physically remove humans from such dangerous conditions.”

MOBILE MANIPULATION

La Lune was a prized frigate of King Louis XIV’s French fleet. It sank for unknown reasons in 1664, 32 kilometers off Toulon on the country’s southeast coast, while returning from North Africa, where its crew had fought off pirates. The wreck was discovered only 20 years ago.

Khatib originally designed OceanOne, which can reach depths of 2,000 meters, for researchers studying deep coral reefs in the Red Sea.

The android is about 1.5 meters long and weighs 260 kilograms. Its eyes consist of two wide-angle cameras that give it stereoscopic vision so that a human pilot controlling it from a ship above can see what the robot sees.

IEEE Life Fellow Oussama Khatib describes the technology used in OceanOne. (Video: Stanford)

Batteries, computers, and eight multidirectional thrusters are housed in a tail section. Sensors throughout the robot gauge current and turbulence, automatically activating the thrusters when needed. The motors adjust the arms to keep the android’s hands steady as it works. If it senses that its thrusters won’t slow it down quickly enough, the android can brace for impact with its arms.
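The brace-for-impact decision can be pictured with a little arithmetic. The sketch below is not OceanOne's control software. It assumes a simple constant-deceleration model, and the function names, parameters, and numbers are hypothetical, chosen only to illustrate the idea: if the distance needed to stop under full thrust exceeds the distance to the obstacle, the robot would brace.

```python
def stopping_distance(speed_m_s: float, max_decel_m_s2: float) -> float:
    """Distance needed to stop under constant deceleration: v^2 / (2a).

    Hypothetical model; real underwater dynamics also involve drag and currents.
    """
    if max_decel_m_s2 <= 0:
        raise ValueError("max_decel_m_s2 must be positive")
    return speed_m_s ** 2 / (2.0 * max_decel_m_s2)


def should_brace(speed_m_s: float, distance_m: float, max_decel_m_s2: float) -> bool:
    """Return True when the thrusters alone cannot stop the robot in time."""
    return stopping_distance(speed_m_s, max_decel_m_s2) > distance_m


if __name__ == "__main__":
    # Illustrative numbers only: drifting at 1 m/s, 0.5 m from a wall,
    # with thrusters assumed to provide 0.5 m/s^2 of deceleration.
    print(should_brace(1.0, 0.5, 0.5))
```

In this toy case the robot would need a full meter to stop but has only half a meter, so the check says to brace.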

To make further sense of its underwater environment, OceanOne is fitted with sensors in two articulated claws in each hand that give it a sense of touch. What the bot “feels”—the haptic sense—is relayed back to the pilot through a pair of joysticks that he holds in his hands.

If, for example, the robot’s mission is to retrieve a delicate item from the seabed, the haptic feedback, along with images from the cameras, lets the operator determine whether the object is heavy or light. The operator then lets the android know how firmly to grip the item so as not to damage it.
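One way to picture this two-way exchange is as a pair of scaled, bounded signals: force sensed at the claws is scaled down and sent to the joysticks, and the operator's squeeze is scaled up into a grip force with a safety cap. The sketch below is a minimal illustration under those assumptions. The scale factor, force limits, and function names are hypothetical and are not taken from the OceanOne system.

```python
def clamp(value: float, low: float, high: float) -> float:
    """Limit value to the range [low, high]."""
    return max(low, min(high, value))


def joystick_feedback(sensed_force_n: float,
                      scale: float = 0.1,
                      max_feedback_n: float = 5.0) -> float:
    """Map force sensed at the robot's hand to force felt at the joystick.

    The scale and cap are assumed values that keep feedback comfortable.
    """
    return clamp(sensed_force_n * scale, -max_feedback_n, max_feedback_n)


def grip_command(operator_squeeze: float, max_grip_n: float) -> float:
    """Map the operator's squeeze (0.0 to 1.0) to a grip force in newtons.

    max_grip_n is an assumed per-object limit, set low for fragile items.
    """
    return clamp(operator_squeeze, 0.0, 1.0) * max_grip_n


if __name__ == "__main__":
    print(joystick_feedback(20.0))   # moderate contact force, scaled down
    print(joystick_feedback(100.0))  # strong contact, limited by the cap
    print(grip_command(0.5, 8.0))    # half squeeze on a fragile object
```

Capping the grip per object reflects the article's point: the operator, not the robot, judges how delicate the item is and sets the limit accordingly.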

“Whatever the robot touches with its ‘hands,’ the operator sitting in the boat feels in his hands via the joysticks,” Khatib says. “This haptics interface connects the human with the machine. The robot can feel the environment, which allows the human to interact with it. Problems can be handled that only a human’s intuition can solve.”